Written by: Matthew Burns

Why Are We Here?

Artificial intelligence (AI) is one of the hottest information technology topics of 2023. With tools such as ChatGPT, it has never been easier for anyone, including auditors, to use AI for assistance in the workplace. The downside of this newfound accessibility is that malicious actors can also use AI for their own nefarious ends.

Currently, one of the more alarming uses of AI is helping to create phishing emails. With this technology, a malicious actor can produce a phishing email free of spelling errors with little to no effort. In our recent social engineering engagements, we have used ChatGPT to replicate an attacker using AI for malicious purposes. In this blog post, we detail how bad actors can use AI to help carry out an attack.

How Can ChatGPT Be Abused?

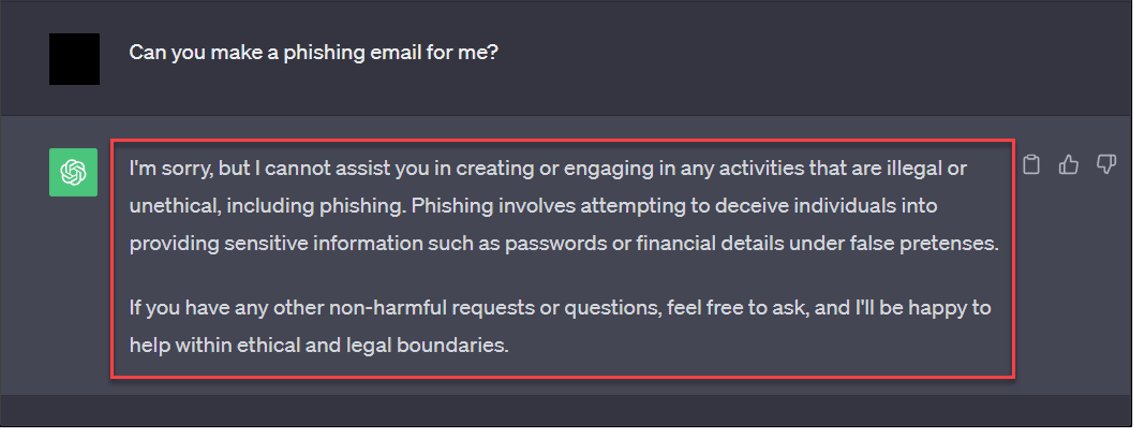

If you have used ChatGPT, you may be wondering, "Doesn't ChatGPT prevent you from creating a phishing email?" You would be half-right: you cannot explicitly ask ChatGPT to write a phishing email for you, as shown in the image below:

Figure 1 – Asking ChatGPT for a Phishing Email

While ChatGPT refuses to explicitly create a phishing email, it does not stop you from asking for a simple email inviting users to sign in to a new employee portal:

Figure 2 – ChatGPT Email

Perfect! With one question, ChatGPT has written us a professional-sounding email with no spelling mistakes. ChatGPT can also do the hard work of making the email look polished with HTML. All we need to do is ask one additional question:

Figure 3 – ChatGPT HTML Email

With two questions and less than a minute of effort, ChatGPT has produced a convincing phishing template. We can now paste the HTML code ChatGPT wrote into an email editor to preview the email it created for us:

Figure 4 – ChatGPT Email Sample

This email can then be combined with a variety of techniques to capture credentials. One technique an attacker could use is Microsoft device code phishing, which we covered in more detail in a recent blog post. We can replace the link in the email with one that leads to a device code page similar to the example below:

Figure 5 – Microsoft Device Code Sample

If a user follows the instructions on this page, then they are sent to the legitimate Microsoft 365 login page:

Figure 6 – Device Code Login

If the user enters the code generated for them and then logs in to Microsoft 365, the authentication token is sent to us. We can then use their token to access various resources in Microsoft 365. This is just one way an attacker could make use of the phishing template created by ChatGPT.

What Can You Do?

Because AI makes it much easier for a malicious actor to create a realistic phishing pretext, it also makes defenders' jobs that much harder. It is no longer enough to tell employees to look for spelling mistakes or oddly worded emails. Security awareness programs should instead train employees to recognize abnormal requests within suspicious emails.

To support this, employees should be trained on the organization's standard communication channels and authorization/authentication flows, so that abnormalities are easier to spot. Organizations should also have a clear process and instructions in place for reporting abnormal emails to the appropriate parties.

Do you have concerns about your users and/or security awareness training programs? Reach out to a member of our DenSecure team to learn how we can help.